Approach

This post implements in code the "bought together" strategy described in the previous one. It raises the public LB score from 0.0204 to 0.0215.

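- Take the items most frequently purchased together with what each customer recently bought

- Fill the remaining slots with last week's most popular items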

Code

import cudf

Load the dataset.

train = cudf.read_csv('../input/h-and-m-personalized-fashion-recommendations/transactions_train.csv')

# Convert the ids to integers first to reduce memory usage

train['customer_id'] = train['customer_id'].str[-16:].str.hex_to_int().astype('int64')

train['article_id'] = train.article_id.astype('int32')

# Save a copy in parquet format

train.t_dat = cudf.to_datetime(train.t_dat)

train = train[['t_dat','customer_id','article_id']]

train.to_parquet('train.pqt',index=False)

print( train.shape )

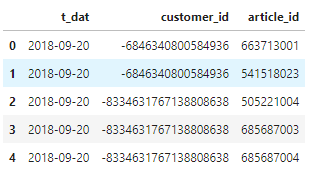

train.head()(31788324, 3)
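The id conversion in the code above keeps only the last 16 hex characters of each customer_id and reads them as a 64-bit integer. Here is a minimal pure-Python sketch of the same idea. The helper name `hex_tail_to_int64` and the sample ids are made up for illustration, and folding values of 2**63 and above into the negative range is my assumption about how to stay inside int64, not something the source states.

```python
def hex_tail_to_int64(customer_id: str) -> int:
    """Read the last 16 hex characters of an id as a signed 64-bit integer."""
    value = int(customer_id[-16:], 16)   # 16 hex characters = 64 bits, unsigned
    if value >= 2**63:                   # assumption: wrap into the signed range
        value -= 2**64
    return value


# Hypothetical 64-character ids, for illustration only.
small = hex_tail_to_int64("0" * 48 + "00000000000000ff")
wrapped = hex_tail_to_int64("f" * 64)
```

An 8-byte integer takes far less memory than a long hex string, which is the point of the conversion.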

Keep only the transactions made at most 6 days before each customer's last transaction.
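To see what this recency filter does on a small scale, here is a pandas sketch on made-up data. It is not the author's code: the original runs on cudf and uses a groupby followed by a merge, while this sketch uses `transform("max")` to get the same per-customer last date. All values in `tx` are invented.

```python
import pandas as pd

# Made-up transactions for two customers.
tx = pd.DataFrame({
    "t_dat": pd.to_datetime(["2020-09-01", "2020-09-10", "2020-09-15",
                             "2020-08-01", "2020-08-07"]),
    "customer_id": [1, 1, 1, 2, 2],
    "article_id": [11, 12, 13, 21, 22],
})

# Each customer's last purchase date, broadcast back onto every row.
max_dat = tx.groupby("customer_id")["t_dat"].transform("max")

# Whole days between each transaction and that customer's last one.
diff_dat = (max_dat - tx["t_dat"]).dt.days

# Keep transactions at most 6 days older than the customer's last one.
recent = tx.loc[diff_dat <= 6]
kept_articles = recent["article_id"].tolist()
```

Customer 1's purchase from 2020-09-01 is 14 days older than their last one and is dropped; customer 2's purchase from 2020-08-01 is exactly 6 days older and is kept.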

# Date of each customer's last transaction

tmp = train.groupby('customer_id').t_dat.max().reset_index()

tmp.columns = ['customer_id','max_dat']

train = train.merge(tmp,on=['customer_id'],how='left')

# Number of days between this transaction and the customer's last transaction

train['diff_dat'] = (train.max_dat - train.t_dat).dt.days

# Drop the transaction if the gap is more than 6 days

train = train.loc[train['diff_dat']<=6]

print('Train shape:',train.shape)

Output:

Train shape: (5181535, 5)

Count how many times each customer bought each article.
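A small pandas sketch on made-up data shows what this counting and ordering step produces. It assumes the same three columns as the original and uses `transform("count")` in place of the groupby plus merge; the data in `tx` is invented.

```python
import pandas as pd

# One made-up customer who bought article 11 twice and 12, 13 once each.
tx = pd.DataFrame({
    "t_dat": pd.to_datetime(["2020-09-10", "2020-09-12",
                             "2020-09-14", "2020-09-15"]),
    "customer_id": [1, 1, 1, 1],
    "article_id": [11, 11, 12, 13],
})

# Number of rows per (customer, article) pair, attached to every row.
tx["ct"] = tx.groupby(["customer_id", "article_id"])["t_dat"].transform("count")

# Most-bought first; ties are broken by the most recent purchase date.
tx = tx.sort_values(["ct", "t_dat"], ascending=False)

# Keep one row per pair; after sorting, the first row is the most recent one.
ranked = tx.drop_duplicates(["customer_id", "article_id"])
order = ranked["article_id"].tolist()
```

Article 11 comes first because it was bought twice; 13 precedes 12 because it was bought more recently.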

# Count how many times the customer bought this article

tmp = train.groupby(['customer_id','article_id'])['t_dat'].agg('count').reset_index()

tmp.columns = ['customer_id','article_id','ct']

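# Sort by purchase count and transaction date, both descending, so that when the ids are later joined into a string, articles bought more often and more recently come first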

train = train.merge(tmp,on=['customer_id','article_id'],how='left')

train = train.sort_values(['ct','t_dat'],ascending=False)

train = train.drop_duplicates(['customer_id','article_id'])

train = train.sort_values(['ct','t_dat'],ascending=False)

train.head()

pairs_cudf.npy holds a map from each article_id to the article_id most often purchased together with it.
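The loading code that follows calls `.item()` on the result of `np.load`. The sketch below shows why that is needed when a dict is stored in a .npy file: NumPy wraps it in a 0-dimensional object array. The dict contents and the file name `pairs_example.npy` are hypothetical; the source does not show how the real file was produced.

```python
import os
import tempfile

import numpy as np

# Hypothetical pairs: article_id -> article_id most often bought with it.
pairs_example = {111: 222, 222: 111, 333: 111}

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "pairs_example.npy")
    np.save(path, pairs_example)               # stored as a pickled 0-d object array
    loaded = np.load(path, allow_pickle=True)  # pickled objects must be allowed explicitly
    loaded_shape = loaded.shape                # () for a 0-d array
    pairs = loaded.item()                      # unwrap the array back into a dict
```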

import pandas as pd, numpy as np

train = train.to_pandas()

pairs = np.load('../input/hmitempairs/pairs_cudf.npy',allow_pickle=True).item()

train['article_id2'] = train.article_id.map(pairs)

train2 maps the article in each transaction to the article most frequently bought together with it.
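As a toy illustration of this mapping step, the pandas sketch below uses a hypothetical `pairs` dict in which one article has no partner. It shows why the code that follows filters with `notnull()` and drops duplicates.

```python
import pandas as pd

# Hypothetical map; article 13 has no partner.
pairs = {11: 21, 12: 21}

bought = pd.DataFrame({"customer_id": [1, 1, 1], "article_id": [11, 12, 13]})
bought["article_id2"] = bought["article_id"].map(pairs)  # 13 becomes NaN

partners = bought[["customer_id", "article_id2"]]
partners = partners.loc[partners["article_id2"].notnull()]           # drop unmapped rows
partners = partners.drop_duplicates(["customer_id", "article_id2"])  # 11 and 12 both map to 21
partners = partners.rename(columns={"article_id2": "article_id"})
```

Because of the NaN, the mapped column is stored as float, which is why the ids are cast back to an integer type after the two frames are combined.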

train2 = train[['customer_id','article_id2']].copy()

train2 = train2.loc[train2.article_id2.notnull()]

train2 = train2.drop_duplicates(['customer_id','article_id2'])

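train2 = train2.rename({'article_id2':'article_id'},axis=1)

Concatenate train and train2 (past purchases plus their most related articles) and drop duplicates, since the same customer and article pair may appear more than once.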
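The effect of this combine-and-deduplicate step can be seen on a tiny made-up example. The frames `bought` and `partners` below are invented stand-ins for train and train2.

```python
import pandas as pd

bought = pd.DataFrame({"customer_id": [1, 1], "article_id": [11, 12]})
# Made-up partners: 12 was already bought by this customer, 21 is new.
partners = pd.DataFrame({"customer_id": [1, 1], "article_id": [12.0, 21.0]})

# Stack purchases on top of partners, so purchased articles keep priority.
candidates = pd.concat([bought, partners], axis=0, ignore_index=True)
candidates["article_id"] = candidates["article_id"].astype("int32")

# drop_duplicates keeps the first occurrence of each (customer, article) pair.
candidates = candidates.drop_duplicates(["customer_id", "article_id"])
result = candidates["article_id"].tolist()
```

Since the purchased articles are listed first, they stay ahead of the paired articles in the final ranking.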

train = train[['customer_id','article_id']]

train = pd.concat([train,train2],axis=0,ignore_index=True)

train.article_id = train.article_id.astype('int32')

train = train.drop_duplicates(['customer_id','article_id'])

Join each customer's article ids into a single string.
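A pandas sketch of this string-building step on made-up rows follows. The original relies on `.sum()` to concatenate strings within each group; this sketch uses `"".join` for the same effect, which is a substitution on my part.

```python
import pandas as pd

candidates = pd.DataFrame({
    "customer_id": [1, 1, 2],
    "article_id": [924243001, 918522001, 751471001],
})

# Restore the leading zero lost in the integer cast and prefix a separator space.
candidates["article_id"] = " 0" + candidates["article_id"].astype(str)

# Concatenate the pieces per customer; row order within each group is preserved.
preds = (candidates.groupby("customer_id")["article_id"]
         .agg("".join)
         .reset_index()
         .rename(columns={"article_id": "prediction"}))
first_prediction = preds.loc[preds["customer_id"] == 1, "prediction"].iloc[0]
```

Each prediction starts with a space, which is why the final step strips whitespace before truncating.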

train.article_id = ' 0' + train.article_id.astype('str')

preds = cudf.DataFrame( train.groupby('customer_id').article_id.sum().reset_index() )

preds.columns = ['customer_id','prediction']

preds.head()

Get the 12 most popular articles of the last week.
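The popularity ranking can be sketched on a handful of made-up rows. `top_n` is set to 2 here only to keep the example short; the original keeps 12.

```python
import pandas as pd

# Made-up purchases from one week: three, two, and one sale respectively.
week = pd.DataFrame({"article_id": [924243001, 924243001, 924243001,
                                     918522001, 918522001, 751471001]})

top_n = 2  # the original keeps 12

# value_counts sorts by frequency, most common first.
top_ids = week["article_id"].value_counts().index[:top_n].astype(str)

# Same format as the per-customer strings: a space and a leading zero per id.
top_string = " 0" + " 0".join(top_ids)
```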

train = cudf.read_parquet('train.pqt')

train.t_dat = cudf.to_datetime(train.t_dat)

train = train.loc[train.t_dat >= cudf.to_datetime('2020-09-16')]

top12 = ' 0' + ' 0'.join(train.article_id.value_counts().to_pandas().index.astype('str')[:12])

print("Last week's top 12 popular items:")

print( top12 )

Output:

Last week's top 12 popular items:

0924243001 0924243002 0918522001 0923758001 0866731001 0909370001 0751471001 0915529003 0915529005 0448509014 0762846027 0714790020

Write the submission.

sub = cudf.read_csv('../input/h-and-m-personalized-fashion-recommendations/sample_submission.csv')

sub = sub[['customer_id']]

sub['customer_id_2'] = sub['customer_id'].str[-16:].str.hex_to_int().astype('int64')

sub = sub.merge(preds.rename({'customer_id':'customer_id_2'},axis=1), on='customer_id_2', how='left').fillna('')

del sub['customer_id_2']

Append top12 to each customer's predictions.
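The merge above and this append step together guarantee that every customer gets a prediction, including those with no recent purchases. A pandas sketch with made-up values follows; the `top12` string is shortened to two ids for readability.

```python
import pandas as pd

top12 = " 0924243001 0924243002"  # shortened, made-up fallback string
preds = pd.DataFrame({"customer_id": [1], "prediction": [" 0751471001"]})
sub = pd.DataFrame({"customer_id": [1, 2]})  # customer 2 has no recent purchases

# A left merge keeps every submission row; missing predictions become ''.
sub = sub.merge(preds, on="customer_id", how="left").fillna("")

# Personal candidates come first, popular items fill whatever space is left.
sub["prediction"] = sub["prediction"] + top12
predictions = sub["prediction"].tolist()
```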

sub.prediction = sub.prediction + top12

Keep only 12 articles and save the predictions.
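The truncation works by character count rather than by counting ids. The pure-Python sketch below checks the arithmetic on a made-up string of 15 ids.

```python
# Build a prediction string of 15 ten-character ids, each prefixed by a space.
ids = [f"0{900000000 + i}" for i in range(15)]
prediction = "".join(" " + article_id for article_id in ids)

# 12 ids of 10 characters each, plus the 11 spaces between them.
max_len = 12 * 10 + 11

# Strip the leading space first, otherwise the slice would cut the 12th id short.
trimmed = prediction.strip()[:max_len]
kept = trimmed.split(" ")
```

This only works because every article id is exactly 10 characters long once the leading zero is restored.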

# Strip leading and trailing spaces, then keep the first 131 characters: 12 ids x 10 characters + 11 spaces = 131

sub.prediction = sub.prediction.str.strip()

sub.prediction = sub.prediction.str[:131]

sub.to_csv(f'submission.csv',index=False)

sub.head()

The final public LB score is 0.0215.