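What is hyperopt?

hyperopt is a Python library for hyperparameter tuning. It uses Bayesian optimization to search for the hyperparameters that minimize a loss function.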

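The hyperparameter optimization process

Hyperparameter optimization consists of four main parts:

- Search space: the range of values each hyperparameter may take
- Loss function: the quantity to minimize. To maximize accuracy, for example, use the negative accuracy as the loss
- Optimization algorithm: decides which hyperparameter values to try in the next iteration
- Optimization results: a list of <hyperparameter combination, loss value> pairs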
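The four parts can be seen without hyperopt at all. The example below is a minimal, hypothetical sketch. It uses plain random search instead of the TPE algorithm that hyperopt's tpe.suggest implements. The single parameter x and the toy score that peaks at x = 2 are made-up stand-ins for real hyperparameters and accuracy.

```python
import random

# 1. Search space (hypothetical): one parameter x, uniform on [-5, 5]
def sample_space(rng):
    return {"x": rng.uniform(-5.0, 5.0)}

# Toy "accuracy-like" score in [0, 1] that is highest at x = 2
def score(params):
    return 1.0 - (params["x"] - 2.0) ** 2 / 49.0

# 2. Loss function: we want to MAXIMIZE the score, so we minimize its negative
def loss(params):
    return -score(params)

# 3. Optimization algorithm: plain random search (a stand-in for TPE)
def random_search(n_trials, seed=0):
    rng = random.Random(seed)
    results = []  # 4. Optimization results: (params, loss) pairs
    for _ in range(n_trials):
        params = sample_space(rng)
        results.append((params, loss(params)))
    return results

results = random_search(50)
best_params, best_loss = min(results, key=lambda pair: pair[1])
print(best_params, best_loss)
```

hyperopt keeps the same structure. It replaces the blind sampling in step 3 with TPE, which uses the losses seen so far to decide where to sample next.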

Importing libraries and the dataset

Import the required Python libraries:

```python
import pandas as pd
import numpy as np
import xgboost as xgb
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Hyperparameter tuning
from hyperopt import STATUS_OK, Trials, fmin, hp, tpe
```

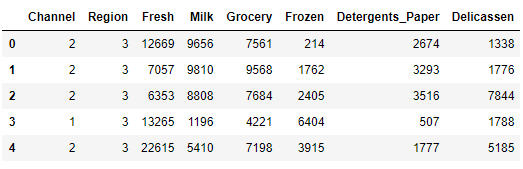

Load the dataset (download: Wholesale customers data.csv):

```python
data = 'https://bastudypic.oss-cn-hongkong.aliyuncs.com/datasets/Wholesale%20customers%20data.csv'
df = pd.read_csv(data)
df.head()
```

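The target to predict is Channel, which takes two values: 1 or 2. Recent versions of XGBClassifier expect class labels 0 and 1, so Channel 2 is mapped to 0 and Channel 1 stays 1:

```python
X = df.drop('Channel', axis=1)

# Map Channel 2 -> 0 and Channel 1 -> 1
y = df['Channel'].map({1: 1, 2: 0})
```

Splitting the dataset

```python
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)
```

Bayesian optimization with Hyperopt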

Defining the search space

```python
space = {
    'max_depth': hp.quniform('max_depth', 3, 18, 1),
    'gamma': hp.uniform('gamma', 1, 9),
    'reg_alpha': hp.quniform('reg_alpha', 40, 180, 1),
    'reg_lambda': hp.uniform('reg_lambda', 0, 1),
    'colsample_bytree': hp.uniform('colsample_bytree', 0.5, 1),
    'min_child_weight': hp.quniform('min_child_weight', 0, 10, 1),
    'n_estimators': 180,  # fixed, not searched
    'seed': 0,            # fixed, not searched
}
```

Commonly used hyperopt search-space expressions:

- hp.choice(label, options): returns one of the options; options must be a list or tuple
- hp.randint(label, upper): returns a random integer in $[0, \text{upper})$
- hp.uniform(label, low, high): returns a value uniformly distributed between low and high
- hp.quniform(label, low, high, q): returns $\text{round}(\text{uniform}(\text{low}, \text{high}) / q) \times q$. The uniform draw is rounded to a multiple of $q$, and the result is still a float such as 7.0
- hp.normal(label, mu, sigma): returns a real value drawn from a normal distribution with mean $\mu$ and standard deviation $\sigma$
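The semantics in the list above can be reproduced with the standard library. The sketch below is a hypothetical illustration only: the helper names mirror hp.* but are not hyperopt's implementation. To draw real samples from a hyperopt space, hyperopt.pyll.stochastic.sample(space) can be used. The sketch shows that a quniform draw is an integer-valued float, which is why the objective function casts max_depth and min_child_weight with int().

```python
import random

rng = random.Random(0)

def choice(options):
    # like hp.choice: one element of a list/tuple
    return rng.choice(options)

def randint(upper):
    # like hp.randint(label, upper): integer in [0, upper)
    return rng.randrange(upper)

def uniform(low, high):
    # like hp.uniform: real value uniformly distributed between low and high
    return rng.uniform(low, high)

def quniform(low, high, q):
    # like hp.quniform: round(uniform(low, high) / q) * q, kept as a float
    # (Python's round, like numpy's, rounds halves to even)
    return float(round(uniform(low, high) / q) * q)

def normal(mu, sigma):
    # like hp.normal: normal distribution with mean mu, std sigma
    return rng.gauss(mu, sigma)

max_depth = quniform(3, 18, 1)
print(max_depth, type(max_depth))  # an integer-valued float
print(int(max_depth))              # what the objective passes to XGBoost
```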

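Defining the objective function

```python
def objective(space):
    # Assumes XGBoost >= 1.6, where eval_metric and early_stopping_rounds
    # are constructor arguments rather than fit() arguments
    clf = xgb.XGBClassifier(
        n_estimators=space['n_estimators'],
        max_depth=int(space['max_depth']),  # quniform returns floats
        gamma=space['gamma'],
        reg_alpha=space['reg_alpha'],
        reg_lambda=space['reg_lambda'],
        min_child_weight=int(space['min_child_weight']),
        # keep as a float: int() would truncate any value below 1 to 0
        colsample_bytree=space['colsample_bytree'],
        random_state=space['seed'],
        eval_metric='auc',
        early_stopping_rounds=10,
    )

    # Early stopping monitors the last eval_set entry (here the test set)
    evaluation = [(X_train, y_train), (X_test, y_test)]
    clf.fit(X_train, y_train, eval_set=evaluation, verbose=False)

    pred = clf.predict(X_test)  # class labels 0/1
    accuracy = accuracy_score(y_test, pred)
    print("SCORE:", accuracy)

    # fmin minimizes the loss, so return the negative accuracy
    return {'loss': -accuracy, 'status': STATUS_OK}
```

Optimization algorithm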

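fmin searches space for the hyperparameters that minimize the value returned by fn. Here it uses the tpe.suggest algorithm (Tree of Parzen Estimators) and runs max_evals evaluations. It records each evaluation in trials and returns the best hyperparameters found.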

```python
trials = Trials()

best_hyperparams = fmin(fn=objective,
                        space=space,
                        algo=tpe.suggest,
                        max_evals=100,
                        trials=trials)
```

```
SCORE: 0.3484848484848485
SCORE: 0.3484848484848485
...
SCORE: 0.8712121212121212
SCORE: 0.6515151515151515
100%|██████████| 100/100 [00:03<00:00, 31.03trial/s, best loss: -0.8712121212121212]
```

```python
best_hyperparams
```

```
{'colsample_bytree': 0.9325751775404758,
 'gamma': 4.591909715645454,
 'max_depth': 7.0,
 'min_child_weight': 1.0,
 'reg_alpha': 47.0,
 'reg_lambda': 0.5259245499862452}
```

Cross-validation

```python
from xgboost import cv

# fmin returns quantized values as floats (e.g. 7.0); cast integer-valued params
int_params = ['max_depth', 'min_child_weight']
for int_param in int_params:
    best_hyperparams[int_param] = int(best_hyperparams[int_param])

best_hyperparams['objective'] = 'binary:logistic'

data_dmatrix = xgb.DMatrix(data=X, label=y)

# 4-fold cross-validation with the tuned parameters
xgb_cv = cv(dtrain=data_dmatrix, params=best_hyperparams, nfold=4,
            num_boost_round=50, early_stopping_rounds=10, metrics="auc",
            as_pandas=True, seed=3)

xgb_cv.head()
```

Building the model

```python
# best_hyperparams now has integer max_depth/min_child_weight and an objective
clf = xgb.XGBClassifier(**best_hyperparams, eval_metric='auc',
                        early_stopping_rounds=10)

evaluation = [(X_train, y_train), (X_test, y_test)]
clf.fit(X_train, y_train, eval_set=evaluation, verbose=False)

pred = clf.predict(X_test)
accuracy = accuracy_score(y_test, pred)
```

Feature importance

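```python
clf.feature_importances_
```

```
array([0.       , 0.       , 0.       , 0.3455514, 0.       , 0.6544486,
       0.       ], dtype=float32)
```

Visualizing feature importance

The "F score" on the x-axis of xgb.plot_importance is not an ANOVA F statistic. By default (importance_type='weight') it is the number of times each feature is used to split a tree.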

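```python
import matplotlib.pyplot as plt

# Create the figure first so figsize applies to the importance plot
fig, ax = plt.subplots(figsize=(16, 12))
xgb.plot_importance(clf, ax=ax)  # importance_type='weight' by default
plt.show()
```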