1 Introduction

1.1 What Is Null Importance

Null Importance is a feature selection method proposed by Kaggle Grandmaster Olivier.

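Tree models such as XGBoost and LightGBM have very strong fitting capacity. By building a large number of subtrees, they can fit features that are unrelated to the label, and even pure noise, so well that noise may end up with a higher importance than genuinely useful features. Because raw feature importance can be inflated this way, dropping features directly based on it can discard a lot of potentially useful information.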

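For example, if an ID column is included as a feature, the model directly learns the mapping from each ID to its sample. If the labels are shuffled and the model is retrained on them, the ID is simply mapped onto the shuffled labels instead, so it still looks important even though it carries no real information.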
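To make the ID argument concrete, here is a toy illustration in plain NumPy rather than a tree model (a simplifying assumption): a "model" that memorizes the majority label for every distinct value of a feature. An ID column lets it fit any labeling perfectly, real or shuffled, while a genuinely informative feature loses its fit once the labels are shuffled. The names `lookup_fit_accuracy`, `ids`, and `signal` are hypothetical.

```python
import numpy as np


def lookup_fit_accuracy(feature, labels):
    """Training accuracy of a lookup-table 'model' that predicts, for each
    distinct feature value, the majority label observed for that value."""
    hits = 0
    for value in np.unique(feature):
        group = labels[feature == value]
        majority = 1 if group.mean() >= 0.5 else 0
        hits += int((group == majority).sum())
    return hits / len(labels)


rng = np.random.default_rng(0)
n = 2000
y = rng.integers(0, 2, size=n)
ids = np.arange(n)                                # unique per row, like an ID column
signal = np.where(rng.random(n) < 0.8, y, 1 - y)  # agrees with y about 80% of the time
y_shuffled = rng.permutation(y)                   # same labels, relationship destroyed

results = {}
for name, feat in [("id", ids), ("signal", signal)]:
    results[name] = (lookup_fit_accuracy(feat, y), lookup_fit_accuracy(feat, y_shuffled))
    print(name, "real: %.3f  shuffled: %.3f" % results[name])
```

The ID column scores a perfect fit in both cases, which is exactly why its importance does not collapse under shuffled labels, while the informative feature drops to roughly chance level.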

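To find such features, follow this logic: a genuinely stable and important feature must be important under the real labels, and its importance should drop once the labels are shuffled. Conversely, if a feature performs only moderately under the original labels but its importance actually rises after the labels are shuffled, it is clearly unreliable and should be removed.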

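The idea of Null Importance is to compare each feature's importance with the importances it obtains on randomly shuffled (fake) labels, i.e. its null importances. If a feature still gets a high importance on the fake labels, or its null importances are even higher than its real importance, the feature is considered useless.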

1.2 Steps

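- Train a model on the real labels and record the feature importances.

- Build the null importance distribution: shuffle the labels many times and train one model on each shuffled version. This shows how much importance each feature receives when it has no relationship with the label.

- For each feature, compare its real importance with its null importances.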
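The three steps can be sketched generically as follows. This is a minimal sketch, assuming a pluggable importance function; the hypothetical `abs_corr_importance` (absolute Pearson correlation) stands in for the tree-model importance used later in this article, so it will not reproduce the memorization effect of trees, only the mechanics of the procedure. The synthetic data and the helper names are illustrative.

```python
import numpy as np


def abs_corr_importance(X, y):
    """Stand-in importance: |Pearson correlation| of each column of X with y.
    Assumption: replaces the tree-model importance used in the article."""
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    num = Xc.T @ yc
    den = np.sqrt((Xc ** 2).sum(axis=0) * (yc ** 2).sum())
    return np.abs(num / den)


def null_importances(X, y, importance_fn, n_runs=50, seed=0):
    rng = np.random.default_rng(seed)
    actual = importance_fn(X, y)                           # step 1: real labels
    null = np.stack([importance_fn(X, rng.permutation(y))  # step 2: shuffled labels
                     for _ in range(n_runs)])
    return actual, null                                    # shapes (p,) and (n_runs, p)


rng = np.random.default_rng(42)
n = 1000
y = rng.integers(0, 2, size=n).astype(float)
X = np.column_stack([y + rng.normal(0.0, 1.0, n),  # informative feature
                     rng.normal(0.0, 1.0, n)])     # pure noise
actual, null = null_importances(X, y, abs_corr_importance)

# step 3: share of null runs whose importance is below the real importance
share_below = (null < actual).mean(axis=0)
print("real importance:", actual.round(3))
print("share of null runs below real:", share_below)
```

For the informative column every null run falls below the real importance, while for the noise column the real importance sits somewhere inside its own null distribution.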

1.3 Methods for Comparing Feature Importances

1.3.1 Quantile comparison

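$$ Feature\space score=\log\left(10^{-10}+\frac{importance_{real}}{1+Q(importance_{null},75)}\right) $$

Here $Q(x,p)$ is the $p$-th percentile of $x$. The 1 in the denominator prevents division by zero, and the $10^{-10}$ keeps the logarithm finite when the real importance is 0.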
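The quantile score can be computed with NumPy as below. `quantile_score` is a hypothetical helper name, and the null importances are made-up numbers chosen so the arithmetic is easy to check by hand; `np.percentile` uses linear interpolation by default.

```python
import numpy as np


def quantile_score(actual_imp, null_imps):
    """Section 1.3.1 score: log(1e-10 + real / (1 + 75th percentile of null))."""
    return np.log(1e-10 + actual_imp / (1 + np.percentile(null_imps, 75)))


null_imps = np.array([10.0, 20.0, 30.0, 40.0])
# 75th percentile = 32.5, so the ratio is 100 / 33.5, about 2.985
print(quantile_score(100.0, null_imps))  # about 1.094 (> 0: real well above the null)
print(quantile_score(0.0, null_imps))    # log(1e-10), about -23.03: feature never used
```

A positive score means the real importance exceeds $1 + Q(importance_{null},75)$; the more negative the score, the less the feature stands out from its null distribution.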

1.3.2 Proportion comparison

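$$ Feature\space score=\frac{\sum_{k=1}^{n}\mathbf{1}\left[importance_{null}^{(k)}<Q(importance_{real},25)\right]}{n}\times 100 $$

where $n$ is the number of null importances (one per shuffled run) and $\mathbf{1}[\cdot]$ equals 1 when the condition holds and 0 otherwise.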

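Normally each feature has only one $importance_{real}$. The author still takes its 25th percentile to cover the case where the model is also trained several times on the real labels, giving several real importances per feature.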
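A NumPy version of the proportion score, covering both a single real run and several real runs, might look like this. `ratio_score` is a hypothetical helper name and the importances are made-up values chosen so the counts are easy to verify.

```python
import numpy as np


def ratio_score(actual_imps, null_imps):
    """Section 1.3.2 score: percentage of null importances that fall below
    the 25th percentile of the real-label importances."""
    threshold = np.percentile(np.atleast_1d(actual_imps), 25)
    null_imps = np.asarray(null_imps)
    return 100.0 * (null_imps < threshold).sum() / null_imps.size


null_imps = np.arange(1.0, 11.0)                               # 1, 2, ..., 10
one_run = ratio_score([5.5], null_imps)                        # Q25 of one value is the value itself
several_runs = ratio_score([4.0, 8.0, 9.0, 10.0], null_imps)   # Q25 = 7.0
print(one_run, several_runs)                                   # 50.0 60.0
```

With a single real run the 25th percentile is just that run's importance, so the formula reduces to counting null runs below it.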

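The second method produces scores in the range 0 to 100, so different thresholds can be applied to it to select features, and model performance can then be evaluated for each threshold.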
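Threshold-based selection itself is just a filter over the scores. The sketch below uses hypothetical scores for four features and a hypothetical `select_features` helper; each resulting subset would then be evaluated (for example by cross-validated AUC) and the best-performing threshold kept.

```python
def select_features(scores, threshold):
    """Keep the features whose 0-100 score is at least `threshold`."""
    return [name for name, score in scores.items() if score >= threshold]


# Hypothetical proportion scores for four features
scores = {"f0": 100.0, "f1": 92.5, "f2": 40.0, "f3": 0.0}

subsets = {t: select_features(scores, t) for t in [0, 50, 95]}
for t, feats in subsets.items():
    print(t, feats)
# 0 ['f0', 'f1', 'f2', 'f3']
# 50 ['f0', 'f1']
# 95 ['f0']
```

Higher thresholds give smaller subsets, and a very high threshold can leave no features at all, so an empty subset needs to be handled before training.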

2 Hands-on Code

Import the required libraries:

```python
import gc
import time
import warnings

import numpy as np
import pandas as pd
import lightgbm as lgb
import matplotlib.pyplot as plt
import matplotlib.gridspec as gridspec
import seaborn as sns
from sklearn.metrics import roc_auc_score

# Jupyter magic: remove this line when running as a plain Python script
%matplotlib inline

warnings.simplefilter('ignore', UserWarning)
gc.enable()
```

Function to compute feature importances:

```python
def get_feature_importances(data, shuffle, seed=None):
    # Use every column except the label and the row ID as a feature
    train_features = [f for f in data if f not in ['target', 'id']]

    # Real labels, or a random permutation of them for a null run.
    # .to_numpy() drops the index so the labels are used purely by position.
    y = data['target'].to_numpy()
    if shuffle:
        y = data['target'].sample(frac=1.0).to_numpy()

    # `silent` was removed from lgb.Dataset in LightGBM 4.x; 'verbose': -1 replaces it
    dtrain = lgb.Dataset(data[train_features], y, free_raw_data=False)
    lgb_params = {
        'objective': 'binary',
        'boosting_type': 'rf',   # random-forest mode, which requires bagging
        'subsample': 0.623,
        'colsample_bytree': 0.7,
        'num_leaves': 127,
        'max_depth': 8,
        'seed': seed,
        'bagging_freq': 1,
        'n_jobs': 4,
        'verbose': -1,
    }
    clf = lgb.train(params=lgb_params, train_set=dtrain, num_boost_round=500)

    # One row per feature with its gain and split importance
    imp_df = pd.DataFrame()
    imp_df['feature'] = list(train_features)
    imp_df['importance_gain'] = clf.feature_importance(importance_type='gain')
    imp_df['importance_split'] = clf.feature_importance(importance_type='split')
    imp_df['trn_score'] = roc_auc_score(y, clf.predict(data[train_features]))
    return imp_df
```

Read the data:

```python
data = pd.read_csv('./train.csv')
```

Compute the feature importances on the real labels:

```python
np.random.seed(1995)
actual_imp_df = get_feature_importances(data=data, shuffle=False)
```

Run 80 times to obtain the null importances:

from tqdm import tqdm

null_imp_df = pd.DataFrame()

nb_runs = 80

import time

dsp = ''

for i in tqdm(range(nb_runs)):

start = time.time()

imp_df = get_feature_importances(data=data, shuffle=True)

imp_df['run'] = i + 1

null_imp_df = pd.concat([null_imp_df, imp_df], axis=0)

for l in range(len(dsp)):

print('\b', end='', flush=True)

spent = (time.time() - start) / 60

dsp = 'Done with %4d of %4d (Spent %5.1f min)' % (i + 1, nb_runs, spent)

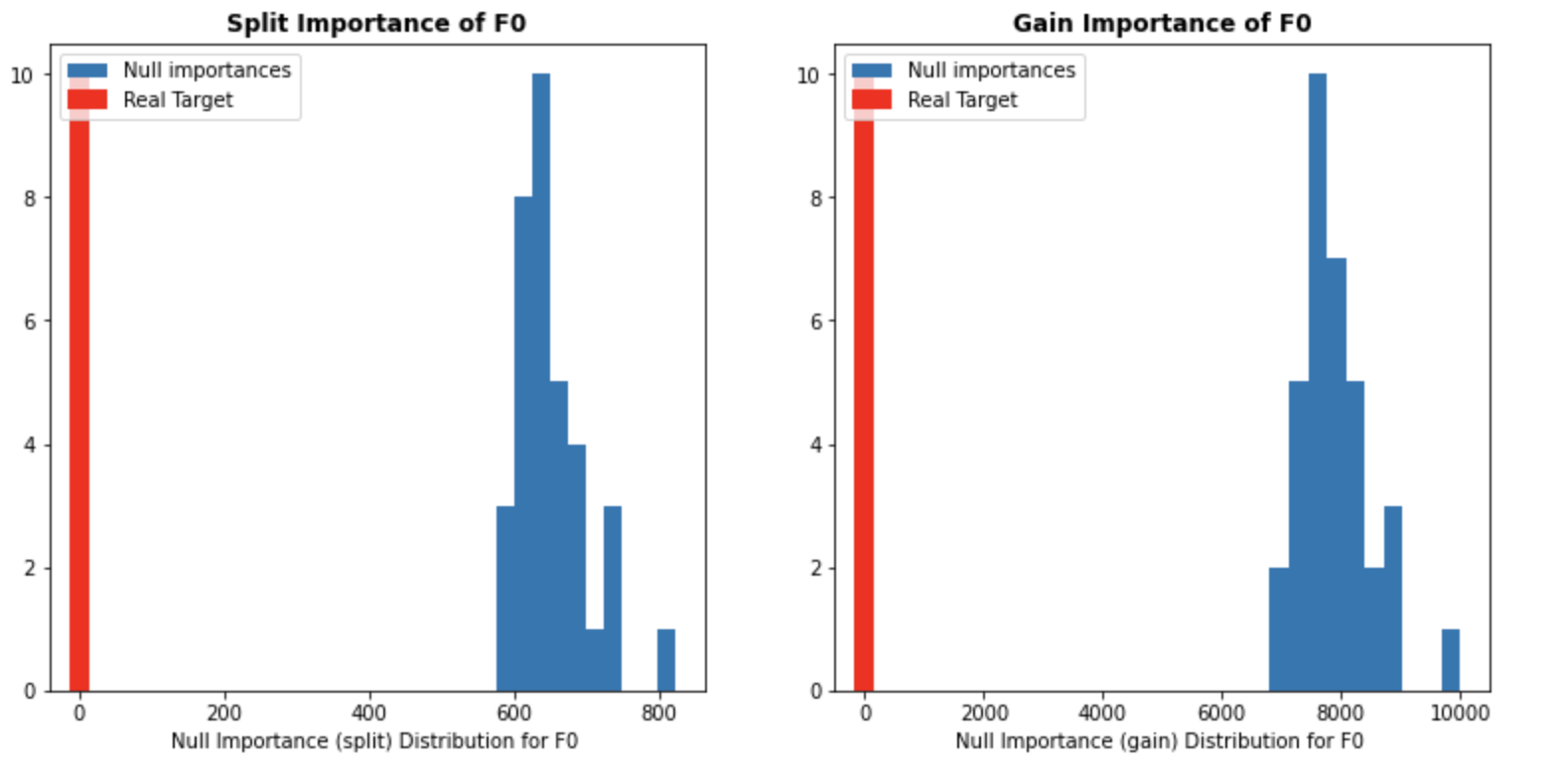

print(dsp, end='', flush=True)显示feature importances分布:

```python
def display_distributions(actual_imp_df_, null_imp_df_, feature_):
    plt.figure(figsize=(13, 6))
    gs = gridspec.GridSpec(1, 2)

    # Split importances: histogram of the null runs, red line at the real importance
    ax = plt.subplot(gs[0, 0])
    a = ax.hist(null_imp_df_.loc[null_imp_df_['feature'] == feature_, 'importance_split'].values,
                label='Null importances')
    ax.vlines(x=actual_imp_df_.loc[actual_imp_df_['feature'] == feature_, 'importance_split'].mean(),
              ymin=0, ymax=np.max(a[0]), color='r', linewidth=10, label='Real Target')
    ax.legend()
    ax.set_title('Split Importance of %s' % feature_.upper(), fontweight='bold')
    plt.xlabel('Null Importance (split) Distribution for %s ' % feature_.upper())

    # Gain importances: same layout in the right-hand panel
    ax = plt.subplot(gs[0, 1])
    a = ax.hist(null_imp_df_.loc[null_imp_df_['feature'] == feature_, 'importance_gain'].values,
                label='Null importances')
    ax.vlines(x=actual_imp_df_.loc[actual_imp_df_['feature'] == feature_, 'importance_gain'].mean(),
              ymin=0, ymax=np.max(a[0]), color='r', linewidth=10, label='Real Target')
    ax.legend()
    ax.set_title('Gain Importance of %s' % feature_.upper(), fontweight='bold')
    plt.xlabel('Null Importance (gain) Distribution for %s ' % feature_.upper())

    # Show and release the figure so many calls in one cell do not pile up open figures
    plt.show()
```

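This example uses the Kaggle Tabular Playground Series (Nov 2021) dataset, which has 100 features, $f_0$ to $f_{99}$, so the loop below outputs $100\times 2$ plots (a split panel and a gain panel per feature):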

```python
for i in range(100):
    # display_distributions creates its own figure, so no extra plt.figure() is needed
    display_distributions(actual_imp_df_=actual_imp_df, null_imp_df_=null_imp_df, feature_=f'f{i}')
```

First comparison method (quantile comparison):

```python
feature_scores = []
for _f in actual_imp_df['feature'].unique():
    f_null_imps_gain = null_imp_df.loc[null_imp_df['feature'] == _f, 'importance_gain'].values
    f_act_imps_gain = actual_imp_df.loc[actual_imp_df['feature'] == _f, 'importance_gain'].mean()
    # 1 + ... avoids division by zero; 1e-10 avoids log(0)
    gain_score = np.log(1e-10 + f_act_imps_gain / (1 + np.percentile(f_null_imps_gain, 75)))

    f_null_imps_split = null_imp_df.loc[null_imp_df['feature'] == _f, 'importance_split'].values
    f_act_imps_split = actual_imp_df.loc[actual_imp_df['feature'] == _f, 'importance_split'].mean()
    split_score = np.log(1e-10 + f_act_imps_split / (1 + np.percentile(f_null_imps_split, 75)))

    feature_scores.append((_f, split_score, gain_score))

scores_df = pd.DataFrame(feature_scores, columns=['feature', 'split_score', 'gain_score'])

plt.figure(figsize=(16, 16))
gs = gridspec.GridSpec(1, 2)
# Plot split-importance scores (top 70 features)
ax = plt.subplot(gs[0, 0])
sns.barplot(x='split_score', y='feature', data=scores_df.sort_values('split_score', ascending=False).iloc[0:70], ax=ax)
ax.set_title('Feature scores wrt split importances', fontweight='bold', fontsize=14)
# Plot gain-importance scores (top 70 features)
ax = plt.subplot(gs[0, 1])
sns.barplot(x='gain_score', y='feature', data=scores_df.sort_values('gain_score', ascending=False).iloc[0:70], ax=ax)
ax.set_title('Feature scores wrt gain importances', fontweight='bold', fontsize=14)
plt.tight_layout()
```

Second comparison method (proportion comparison):

```python
correlation_scores = []
for _f in actual_imp_df['feature'].unique():
    # Gain: % of null importances below the 25th percentile of the real importance
    f_null_imps = null_imp_df.loc[null_imp_df['feature'] == _f, 'importance_gain'].values
    f_act_imps = actual_imp_df.loc[actual_imp_df['feature'] == _f, 'importance_gain'].values
    gain_score = 100 * (f_null_imps < np.percentile(f_act_imps, 25)).sum() / f_null_imps.size

    # Split: same computation on split importances
    f_null_imps = null_imp_df.loc[null_imp_df['feature'] == _f, 'importance_split'].values
    f_act_imps = actual_imp_df.loc[actual_imp_df['feature'] == _f, 'importance_split'].values
    split_score = 100 * (f_null_imps < np.percentile(f_act_imps, 25)).sum() / f_null_imps.size

    correlation_scores.append((_f, split_score, gain_score))

corr_scores_df = pd.DataFrame(correlation_scores, columns=['feature', 'split_score', 'gain_score'])

fig = plt.figure(figsize=(16, 16))
gs = gridspec.GridSpec(1, 2)
# Plot split-importance scores (top 70 features)
ax = plt.subplot(gs[0, 0])
sns.barplot(x='split_score', y='feature', data=corr_scores_df.sort_values('split_score', ascending=False).iloc[0:70], ax=ax)
ax.set_title('Feature scores wrt split importances', fontweight='bold', fontsize=14)
# Plot gain-importance scores (top 70 features)
ax = plt.subplot(gs[0, 1])
sns.barplot(x='gain_score', y='feature', data=corr_scores_df.sort_values('gain_score', ascending=False).iloc[0:70], ax=ax)
ax.set_title('Feature scores wrt gain importances', fontweight='bold', fontsize=14)
plt.tight_layout()
plt.suptitle("Features' split and gain scores", fontweight='bold', fontsize=16)
fig.subplots_adjust(top=0.93)
```

With the second method, drop features at different thresholds and measure model performance for each:

```python
def score_feature_selection(df=None, train_features=None, cat_feats='auto', target=None):
    # Categorical features are declared on the Dataset; `silent` was removed in LightGBM 4.x
    dtrain = lgb.Dataset(df[train_features], target, categorical_feature=cat_feats,
                         free_raw_data=False)
    lgb_params = {
        'objective': 'binary',
        'boosting_type': 'gbdt',
        'learning_rate': .1,
        'subsample': 0.8,          # only takes effect when bagging_freq > 0
        'colsample_bytree': 0.8,
        'num_leaves': 31,
        'max_depth': -1,
        'seed': 13,
        'n_jobs': 4,
        'min_split_gain': .00001,
        'reg_alpha': .00001,
        'reg_lambda': .00001,
        'metric': 'auc',
        'verbose': -1,
    }
    # 5-fold stratified CV; early stopping is configured through a callback
    hist = lgb.cv(
        params=lgb_params,
        train_set=dtrain,
        num_boost_round=2000,
        nfold=5,
        stratified=True,
        shuffle=True,
        seed=17,
        callbacks=[lgb.early_stopping(stopping_rounds=50, verbose=False)],
    )
    # Result keys are 'auc-mean' in LightGBM 3.x and 'valid auc-mean' in 4.x
    mean_key = next(k for k in hist if k.endswith('auc-mean'))
    std_key = next(k for k in hist if k.endswith('auc-stdv'))
    # Return the last mean / std values
    return hist[mean_key][-1], hist[std_key][-1]


# Baseline with all features (optional):
# features = [f for f in data.columns if f not in ['id', 'target']]
# score_feature_selection(df=data, train_features=features, target=data['target'])

for threshold in [0, 10, 20, 30, 40, 50, 60, 70, 80, 90, 95, 99]:
    # correlation_scores holds (feature, split_score, gain_score) tuples
    split_feats = [_f for _f, _score, _ in correlation_scores if _score >= threshold]
    gain_feats = [_f for _f, _, _score in correlation_scores if _score >= threshold]

    print('Results for threshold %3d' % threshold)
    # f0 to f99 are numeric, so no categorical features are declared;
    # a high threshold may leave no features, in which case that run is skipped
    if split_feats:
        split_results = score_feature_selection(df=data, train_features=split_feats, target=data['target'])
        print('\t SPLIT : %.6f +/- %.6f' % split_results)
    if gain_feats:
        gain_results = score_feature_selection(df=data, train_features=gain_feats, target=data['target'])
        print('\t GAIN : %.6f +/- %.6f' % gain_results)
```