Santander Customer Transaction Prediction

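1 Competition overview

The Santander competition asks you to predict whether a customer will make a transaction, regardless of the transaction amount.

The evaluation metric is AUC, and the submission file holds one binary target per ID_code: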

ID_code,target

test_0,0

test_1,1

test_2,0

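...

I entered this competition two years ago and only took part in the early stage; my private LB score at the time was 0.90. Many participants later found the "magic" and pushed the LB to 0.92. Below I rerun my solution with those magic ideas to see how far it gets.

2 Basic EDA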

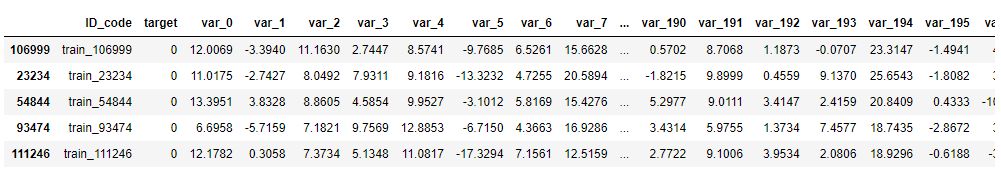

The column to predict is target, and there are 200 features.

import numpy as np

import pandas as pd

train = pd.read_csv('../input/train.csv')

test = pd.read_csv('../input/test.csv')

train.sample(5)

The training set and the test set each contain 200,000 samples:

len(train), len(test)

(200000, 200000)

About $10\%$ of the samples are positive:

train['target'].value_counts() / len(train)

0    0.89951
1    0.10049
Name: target, dtype: float64

3 The magic ideas

3.1 Frequency

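Generate one new feature per original feature: the frequency of each value, i.e. how many times that value occurs in the column.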
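As a minimal sketch of what "frequency" means here, the snippet below counts how often each value occurs in a single column and attaches that count to every row. The toy values are made up; the column name `var_0_count` simply follows the `var_xxx_count` naming used later in this post.

```python
import pandas as pd

# Toy column standing in for one of the var_* features (values are made up).
toy = pd.DataFrame({"var_0": [1.5, 2.0, 1.5, 3.25, 1.5, 2.0]})

# value_counts() maps each distinct value to the number of rows that hold it.
counts = toy["var_0"].value_counts()

# map() looks up each row's value in that table, giving a per-row frequency.
toy["var_0_count"] = toy["var_0"].map(counts)

print(toy)
```

Here 1.5 occurs three times, 2.0 twice and 3.25 once, so the new column carries those counts row by row.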

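3.2 Spotting the generated samples

The frequency computation in 3.1 would normally pool the training and test sets.

In this competition, however, counting over the whole test set hurts the model, because many test rows are generated, and those generated rows seriously distort the frequencies.

My guess: since Kaggle has a public and a private leaderboard, Santander added many generated samples to the public leaderboard to muddy the waters in the name of fairness, and the final private leaderboard is scored on real samples only.

To support this, first compare the ratio of unique values per feature in the training set and the test set: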

train.nunique() / len(train)

ID_code 1.000000

target 0.000010

var_0 0.473360

var_1 0.544660

var_2 0.432775

var_3 0.372985

var_4 0.317575

var_5 0.705145

...

var_195 0.289350

var_196 0.627800

var_197 0.202685

var_198 0.470765

var_199 0.747150

Length: 202, dtype: float64

test.nunique() / len(test)

ID_code 1.000000

var_0 0.327900

var_1 0.358305

var_2 0.309325

var_3 0.282535

var_4 0.249975

var_5 0.416140

...

var_195 0.232410

var_196 0.390190

var_197 0.174085

var_198 0.326310

var_199 0.429665

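Length: 201, dtype: float64

The difference is clear: the proportion of unique values is lower in the test set than in the training set.

So, following YAG320's method, I first identify the generated samples and then compute the frequencies on the real samples only.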
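The detection idea can be sketched on a toy array, under the assumption that a row counts as real if at least one of its values is unique within its column, and as synthetic if every value also appears in some other row. The array `toy_test` and its values are made up for illustration.

```python
import numpy as np

# Made-up 4x2 matrix: rows 0-2 play the role of real rows, row 3 is
# "synthetic" because each of its values is copied from another row.
toy_test = np.array([
    [0.1, 5.0],
    [0.2, 6.0],
    [0.3, 7.0],
    [0.1, 6.0],
])

has_unique = np.zeros(toy_test.shape, dtype=bool)
for col in range(toy_test.shape[1]):
    # index: first position of each distinct value; count: how often it occurs
    _, index, count = np.unique(toy_test[:, col], return_index=True, return_counts=True)
    # Flag the rows whose value in this column occurs exactly once
    has_unique[index[count == 1], col] = True

real_rows = np.flatnonzero(has_unique.any(axis=1))
synthetic_rows = np.flatnonzero(~has_unique.any(axis=1))
print(real_rows, synthetic_rows)
```

Rows 0 and 1 each share one value with row 3 but still own a unique value in the other column, so only row 3 is flagged as synthetic.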

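3.3 Naive Bayes over 200 models

Each model is a LightGBM trained on one original feature plus its frequency feature. A forum post argued that the features are independent of one another, which is why the per-feature models can be combined with Naive Bayes.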
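Why per-feature probabilities can be multiplied can be sketched as follows, assuming the features are conditionally independent given the target (the forum claim, not something verified here). The symbols are notation introduced for this sketch: $p_i = P(y=1\mid x_i)$ is the output of the $i$-th single-feature model, $\pi = P(y=1)$ is the prior, and $n$ is the number of features.

```latex
\begin{aligned}
\frac{P(y=1\mid x)}{P(y=0\mid x)}
  &= \frac{\pi}{1-\pi}\prod_{i=1}^{n}\frac{P(x_i\mid y=1)}{P(x_i\mid y=0)}
   = \left(\frac{1-\pi}{\pi}\right)^{n-1}\prod_{i=1}^{n}\frac{p_i}{1-p_i},\\
\log\frac{P(y=1\mid x)}{P(y=0\mid x)}
  &= \sum_{i=1}^{n}\log\frac{p_i}{1-p_i} - (n-1)\log\frac{\pi}{1-\pi}.
\end{aligned}
```

The last term is the same for every row, so it does not change the ranking of the rows, and AUC depends only on that ranking.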

Related link: https://www.kaggle.com/b5strbal/lightgbm-naive-bayes-santander-0-900

4 Finding the synthetic samples
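On toy numbers, the combination step amounts to summing the per-feature log-odds for each row. The array `per_feature_prob` is hypothetical and stands in for the predictions of two single-feature models on three rows.

```python
import numpy as np

# Hypothetical probabilities: 3 rows, 2 single-feature models.
per_feature_prob = np.array([
    [0.10, 0.20],
    [0.50, 0.50],
    [0.30, 0.05],
])

# Sum of log p minus sum of log (1 - p), i.e. the summed per-feature log-odds.
log_odds = np.log(per_feature_prob).sum(axis=1) - np.log(1 - per_feature_prob).sum(axis=1)

# Only the ordering matters for AUC, so the raw score can be used directly.
order = np.argsort(log_odds)
print(log_odds, order)
```

The row with two predictions of 0.5 gets a score of 0 and ranks highest; the other two rows get negative scores.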

import numpy as np

import pandas as pd

from tqdm import tqdm_notebook as tqdm

import os

print(os.listdir("../input"))

['test.csv', 'train.csv']

Find the unique values of each feature:

test_path = '../input/test.csv'

df_test = pd.read_csv(test_path)

df_test.drop(['ID_code'], axis=1, inplace=True)

df_test = df_test.values

unique_samples = []

unique_count = np.zeros_like(df_test)

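for feature in tqdm(range(df_test.shape[1])):
    # index_: first row index of each distinct value; count_: number of rows sharing that value
    _, index_, count_ = np.unique(df_test[:, feature], return_counts=True, return_index=True)
    # Mark the rows whose value in this feature occurs exactly once
    unique_count[index_[count_ == 1], feature] += 1

# Indexes of the rows with at least one unique feature value (real samples)
real_samples_indexes = np.argwhere(np.sum(unique_count, axis=1) > 0)[:, 0]

# Indexes of the rows with no unique feature value at all (synthetic samples)
synthetic_samples_indexes = np.argwhere(np.sum(unique_count, axis=1) == 0)[:, 0]

print(len(real_samples_indexes))
print(len(synthetic_samples_indexes))

For each synthetic sample, find its generators, i.e. the real samples it was built from: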

df_test_real = df_test[real_samples_indexes].copy()

generator_for_each_synthetic_sample = []

# 20,000 synthetic samples are enough; using 100,000 gives the same result
for cur_sample_index in tqdm(synthetic_samples_indexes[:20000]):
    cur_synthetic_sample = df_test[cur_sample_index]
    # Boolean matrix: True where a real row has the same value as this synthetic row
    potential_generators = df_test_real == cur_synthetic_sample

    # Keep the features in which exactly one real row matches the synthetic row;
    # the real rows that match in any of those features are its generators
    features_mask = np.sum(potential_generators, axis=0) == 1
    verified_generators_mask = np.any(potential_generators[:, features_mask], axis=1)
    verified_generators_for_sample = real_samples_indexes[np.argwhere(verified_generators_mask)[:, 0]]
    generator_for_each_synthetic_sample.append(set(verified_generators_for_sample))

Group the generators and store their indexes:

# Grow the first group: merge every generator set that overlaps it
public_LB = generator_for_each_synthetic_sample[0]
for x in tqdm(generator_for_each_synthetic_sample):
    if public_LB.intersection(x):
        public_LB = public_LB.union(x)

# Seed the second group from synthetic sample 1
# (this assumes sample 1 does not belong to the first group)
private_LB = generator_for_each_synthetic_sample[1]
for x in tqdm(generator_for_each_synthetic_sample):
    if private_LB.intersection(x):
        private_LB = private_LB.union(x)

print(len(public_LB))
print(len(private_LB))

np.save('public_LB', list(public_LB))
np.save('private_LB', list(private_LB))
np.save('synthetic_samples_indexes', list(synthetic_samples_indexes))

5 Walkthrough of the final notebook

Install the required libraries:

! pip install xgboost lightgbm catboost -q

Set the file name:

fname = 'lgb-2021-09-20'

Import the libraries:

import numpy as np

import pandas as pd

import datetime

from matplotlib import pyplot as plt

%matplotlib inline

import seaborn as sns

from tqdm import tqdm_notebook

import gc

import datetime

from sklearn.metrics import f1_score, log_loss

from sklearn.model_selection import KFold, StratifiedKFold

from sklearn.decomposition import PCA

import xgboost as xgb

import lightgbm as lgb

from scipy.stats import hmean, gmean

from scipy.special import expit, logit

pd.options.display.max_rows = 100

pd.options.display.max_colwidth = 100

pd.options.display.max_columns = 100

import pickle as pkl

fast_auc speeds up the AUC computation:

import numpy as np

from numba import jit

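@jit
def fast_auc(y_true, y_prob):
    y_true = np.asarray(y_true)
    # Sort the labels by ascending predicted score
    y_true = y_true[np.argsort(y_prob)]
    nfalse = 0
    auc = 0
    n = len(y_true)
    for i in range(n):
        y_i = y_true[i]
        # Number of negatives seen so far
        nfalse += (1 - y_i)
        # Each positive is ranked above every negative seen so far
        auc += y_i * nfalse
    # Normalize by the number of (negative, positive) pairs
    auc /= (nfalse * (n - nfalse))
    return auc

def eval_auc(preds, dtrain):
    # Custom LightGBM eval function: returns (name, value, is_higher_better)
    labels = dtrain.get_label()
    return 'auc', fast_auc(labels, preds), True

get_importances: get the feature importances

get_ranks: compute the rank of each prediction

cross_validate_lgb: LightGBM cross-validation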

def get_importances(clfs):
    # Gain-based feature importance of each model
    importances = [clf.feature_importance('gain') for clf in clfs]
    # Stack [array([..]), array([..])] into array([[..], [..]])
    importances = np.vstack(importances)
    # Average over the models
    mean_gain = np.mean(importances, axis=0)
    # Feature names
    features = clfs[0].feature_name()
    # Build a pandas DataFrame
    data = pd.DataFrame({'gain':mean_gain, 'feature':features})
    # Plot
    plt.figure(figsize=(8, 30))
    sns.barplot(x='gain', y='feature', data=data.sort_values('gain', ascending=False))
    plt.tight_layout()
    return data

def get_ranks(y_pred):
    # Return the rank of each prediction
    return pd.Series(y_pred).rank().values

# nseed: number of random seeds to bag over
def cross_validate_lgb(param, x_train, y_train, x_test, kf,
                       num_boost_round,
                       fname, nseed=1, verbose_eval=1,
                       target='target', featimp=False, save_pred=False,
                       use_auc=True,
                       early_stopping_rounds=150,
                       use_all_folds=True,
                       ):
    # When saving predictions, append a timestamp to the file name
    if save_pred:
        now = datetime.datetime.now()
        now = str(now.strftime('%Y-%m-%d-%H-%M-%S'))
        print('started at:', now)
        print('num bagging seeds:', nseed)
        fname = '../submissions/'+fname+'_'+now

    print(x_train.shape, x_test.shape)

    # Number of folds
    nfold = kf.n_splits
    print('nfold', nfold)

    # Out-of-fold predictions and test-set predictions
    val_pred = np.zeros((x_train.shape[0], nseed))
    test_pred = np.zeros((x_test.shape[0], nfold * nseed))

    # Number of features
    print('num features:', x_train.shape[1])

    # Build the LightGBM Dataset once for lgb.cv
    d_train = lgb.Dataset(x_train, label=y_train)
    if use_auc:
        metric = 'auc'
    else:
        metric = None

    history = lgb.cv(param, d_train, num_boost_round=num_boost_round,
                     folds=kf, metrics=metric, fobj=None,
                     early_stopping_rounds=early_stopping_rounds,
                     verbose_eval=verbose_eval, show_stdv=True, seed=0)

    # Pick the best round; the final models are trained for that many rounds
    if use_auc:
        best_round = np.argmax(history['auc-mean'])
    else:
        best_round = np.argmin(history['binary_logloss-mean'])
    print('best_round:', best_round)
    if best_round == 0:
        best_round = 1

    # Trained boosters
    bsts = []

    # Out-of-fold loop
    for i, (train_index, test_index) in enumerate(kf.split(x_train, y_train)):
        print('train fold', i, end=' ')
        x_train_kf, x_test_kf = x_train.loc[train_index,:].copy(), x_train.loc[test_index,:].copy()
        y_train_kf, y_test_kf = y_train[train_index], y_train[test_index]

        d_train_kf = lgb.Dataset(x_train_kf, label=y_train_kf)
        d_test_kf = lgb.Dataset(x_test_kf, label=y_test_kf)

        train_fold_pred = 0
        feval = None
        if use_auc:
            feval=eval_auc

        # Optionally train with several seeds
        for seed in range(nseed):
            param['seed'] = seed
            # No early stopping here; train for best_round rounds
            bst = lgb.train(param, d_train_kf, num_boost_round=best_round,
                            verbose_eval=None,
                            early_stopping_rounds=None,
                            )
            bsts.append(bst)

            pred = bst.predict(x_test_kf)
            val_pred[test_index, seed] = (pred)

            pred = bst.predict(x_test)
            # Keep the test prediction of every fold/seed combination
            test_pred[:, i*nseed + seed] = (pred)

            # Accumulate the predictions on this fold's training part
            train_fold_pred = train_fold_pred + bst.predict(x_train_kf)
            print('.', end='')

        # Average over the seeds
        train_fold_pred /= nseed

        # Report performance
        print('train auc: %0.5f' % fast_auc(y_train_kf, train_fold_pred), end=' ')
        val_fold_pred = np.mean(val_pred[test_index,:], axis=1)
        print('val auc: %0.5f' % fast_auc(y_test_kf, val_fold_pred))

        # Free variables that are no longer needed
        del d_train_kf
        del d_test_kf
        gc.collect()

        # Plot the importances if featimp is set
        if featimp:
            importances = get_importances(bsts)
            plt.show()
        else:
            importances = None

        if use_all_folds == False:
            break

    # Save the raw out-of-fold and test predictions
    print('saving raw train prediction to:', fname+'_train.npy')
    np.save(fname+'_train.npy', val_pred)
    print('saving raw test prediction to:', fname+'_test.npy')
    np.save(fname+'_test.npy', test_pred)

    # Small constant that keeps the rescaled values away from exactly 0 and 1
    epsilon = 1e-6

    # Min-max rescale the predictions into (0, 1); ravel() flattens to 1-D
    train_expit = (val_pred + epsilon - val_pred.ravel().min())
    test_expit = (test_pred + epsilon - test_pred.ravel().min())
    train_expit /= (epsilon + train_expit.ravel().max())
    test_expit /= (epsilon + test_expit.ravel().max())

    # Average the predictions over seeds and compute the AUC
    train_pred_mean = np.mean(train_expit, axis=1)
    test_pred_mean = np.mean(test_expit, axis=1)
    print('cv mean auc:%0.5f' % fast_auc(y_train, train_pred_mean))

    if featimp:
        importances = get_importances(bsts)
    else:
        importances = None

    return importances, val_pred, test_pred, bsts, fname

Load the data:

train = pd.read_csv('../input/train.csv')

test = pd.read_csv('../input/test.csv')

features = train.columns[2:]

x_train = train[features].copy()

y_train = train.target.values

x_test = test[features].copy()

Load the ID lists that split the test set into public and private rows, and extract the real test set:

public_lb = np.load('../data/public_LB.npy')
private_lb = np.load('../data/private_LB.npy')
real_test = np.concatenate((public_lb, private_lb))
real_test.shape

# The real test set
real_test = x_test.iloc[real_test]

Compute the frequency of each feature value; the final dataset gains 200 columns named var_xxx_count:

count_cols = []
for c in tqdm_notebook(features[:200]):
    count_col = c+'_count'
    # Count over train + real_test;
    # the index of tmp is the value, the entry is its frequency
    tmp = pd.concat((x_train[c], real_test[c])).value_counts()
    # Cap the count at 6
    x_train[count_col] = x_train[c].map(tmp).clip(0, 6)
    # fillna: a value that appears in neither train nor real_test gets a count of 1
    x_test[count_col] = x_test[c].map(tmp).clip(0, 6).fillna(1)
    count_cols.append(count_col)

Train the LightGBM models:

params = {

'boosting_type': 'gbdt',

"objective" : "binary",

"learning_rate" : 0.01,

"num_leaves" : 3,

'feature_fraction' : 1,

"bagging_fraction" : 0.8,

"bagging_freq" : 1,

'nthread' : 20,

'bin_construct_sample_cnt' : 1000000,

'max_depth' : 2,

'lambda_l2': 2,

}

num_boost_round = 10000

verbose_eval=1000

early_stopping_rounds = 200

res = []
for c, count in zip(tqdm_notebook(features), count_cols):
    fname_var = '_'.join([fname, c])
    print('------------------------')
    print('training models on:', c)

    # Select this feature and its count column
    x_train_1 = x_train[[c, count]].copy()
    y_train = train.target.values
    x_test_1 = x_test[[c, count]].copy()

    # Optionally repeat with several seeds
    for seed in range(1):
        print('***** seed %d *****' % seed)
        kf = StratifiedKFold(5, shuffle=True, random_state=seed)
        (importances_reglinear, train_pred_reglinear, test_pred_reglinear, bsts, fname_save
         ) = cross_validate_lgb(params, x_train_1, y_train, x_test_1, kf,
                                num_boost_round,
                                fname_var, nseed=1, verbose_eval=None,
                                target='target', featimp=False, save_pred=True,
                                use_auc=False,
                                early_stopping_rounds=early_stopping_rounds,
                                use_all_folds=True,
                                )
        # fname_save is the path prefix under which this model's predictions were saved
        res.append(fname_save)

Load the saved predictions:

train_pred = pd.DataFrame()
for col, f in zip(features, res):
    train_pred[col] = np.load('%s_train.npy' % f).ravel()

test_pred = pd.DataFrame()
for col, f in zip(features, res):
    test_pred[col] = np.load('%s_test.npy' % f).mean(axis=1)

Compute: $\log\frac{\prod_i p_i(y=1)}{\prod_i p_i(y=0)}=\sum_i \log p_i(y=1) - \sum_i \log p_i(y=0)$

fast_auc(y_train, np.log(train_pred).sum(axis=1) -np.log(1 - train_pred).sum(axis=1))

Save the submission:

fname = 'final_lgb'

sub = train[['ID_code']].copy()

sub['target'] = np.log(train_pred).sum(axis=1) -np.log(1 - train_pred).sum(axis=1)

sub.target = sub.target.rank() / 200000

print('cv mean auc:%0.5f' % fast_auc(y_train, sub.target))

print('saving train pred to', '../submissions/%s_train.csv' % fname)

sub.to_csv('../submissions/%s_train.csv' % fname, index=False, float_format='%.6f')

sub = test[['ID_code']].copy()

sub['target'] = np.log(test_pred).sum(axis=1) -np.log(1 - test_pred).sum(axis=1)

sub.target = sub.target.rank() / 200000

print('saving test pred to', '../submissions/%s_test.csv' % fname)

sub.to_csv('../submissions/%s_test.csv' % fname, index=False, float_format='%.6f')

sub.head()
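Dividing the rank by 200000 only rescales the scores into (0, 1]; it does not change the AUC, because AUC depends only on the ordering of the predictions. The sketch below checks this on made-up labels and scores. The helper `auc_by_counting` is a hypothetical pure-NumPy stand-in that uses the same counting idea as fast_auc, without numba and without tie handling.

```python
import numpy as np

def auc_by_counting(y_true, y_score):
    """AUC as the fraction of (negative, positive) pairs ranked correctly.

    Same counting idea as fast_auc, without numba; ties are not handled.
    """
    y_sorted = np.asarray(y_true)[np.argsort(y_score)]
    n_neg_seen = 0
    correct_pairs = 0
    for y in y_sorted:
        n_neg_seen += 1 - y
        correct_pairs += y * n_neg_seen
    n_pos = len(y_sorted) - n_neg_seen
    return correct_pairs / (n_neg_seen * n_pos)

labels = np.array([0, 0, 1, 0, 1])
scores = np.array([-3.5, -1.0, -2.0, -4.0, 0.7])  # made-up log-odds

# Rank-transform into (0, 1], analogous to sub.target.rank() / 200000.
ranks = (np.argsort(np.argsort(scores)) + 1) / len(scores)

auc_raw = auc_by_counting(labels, scores)
auc_rank = auc_by_counting(labels, ranks)
print(auc_raw, auc_rank)
```

Both calls return the same value, since the rank transform keeps every pair of rows in the same order.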