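This is the second article in the FCN reproduction series. It continues from FCN Reproduction Series 1: Data Preprocessing + Data Generator Construction.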

The previous article built a data generator that supplies the training set to the model. This article covers building the model itself.

First, import the required libraries:

```python
from tensorflow.keras.models import Model, load_model
from tensorflow.keras.layers import Conv2D, Input, MaxPooling2D, concatenate, Dropout, \
    Lambda, Conv2DTranspose, Add
from tensorflow.keras import backend as K
from tensorflow.keras.optimizers import Adam
import numpy as np
import tensorflow as tf
import os
```

Next, define two helper functions.

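The first is a preprocessing function. It sits in the first layer of the model and rescales pixel values from $[0, 255]$ to $[-1, 1]$:

$$ x_{new} = 2\times\left(\frac{x}{255}-0.5\right) $$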
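The formula can be checked on a few pixel values with the plain-Python sketch below. The scalar helper `preprocess_pixel` is hypothetical and is not part of the original code. It applies the same mapping as `preprocess_input`: 0 becomes -1, 127.5 becomes 0, and 255 becomes 1.

```python
def preprocess_pixel(x):
    """Hypothetical scalar version of preprocess_input: x_new = 2 * (x / 255 - 0.5)."""
    return 2.0 * (x / 255.0 - 0.5)

for v in (0, 127.5, 255):
    print(v, "->", preprocess_pixel(v))
# 0 -> -1.0
# 127.5 -> 0.0
# 255 -> 1.0
```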

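```python
def preprocess_input(x):
    # Scale pixel values from [0, 255] to [-1, 1]
    x /= 255.
    x -= 0.5
    x *= 2.
    return x
```

The second is the metric function `dice`. A Dice coefficient closer to 1 means a more accurate segmentation. The formula is: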

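$$ \text{intersection} = \sum y_{true}\cdot y_{pred} $$

$$ \text{Dice} = \frac{2\times \text{intersection}+\text{smooth}}{\sum y_{true}+\sum y_{pred}+\text{smooth}} $$

Both masks are flattened first, so each sum runs over every pixel and class. The `smooth` term prevents division by zero when both masks are empty.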
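The plain-Python sketch below evaluates the Dice formula on tiny, already-flattened masks. The helper `dice_list` is hypothetical; the Keras version used for training follows it. It shows partial overlap, perfect overlap, and how `smooth` keeps the score defined when both masks are empty.

```python
def dice_list(y_true, y_pred, smooth=1.0):
    """Hypothetical plain-Python Dice on already-flattened masks (same formula as above)."""
    intersection = sum(t * p for t, p in zip(y_true, y_pred))
    return (2.0 * intersection + smooth) / (sum(y_true) + sum(y_pred) + smooth)

print(dice_list([1, 1, 0, 0], [1, 0, 1, 0]))  # 0.6: one overlapping pixel, (2*1 + 1) / (2 + 2 + 1)
print(dice_list([1, 1, 0, 0], [1, 1, 0, 0]))  # 1.0: perfect overlap
print(dice_list([0, 0, 0, 0], [0, 0, 0, 0]))  # 1.0: smooth avoids 0/0 for empty masks
```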

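```python
def dice(y_true, y_pred, smooth=1.):
    # Flatten both masks so the sums run over every pixel and class
    y_true_f = K.flatten(y_true)
    y_pred_f = K.flatten(y_pred)
    intersection = K.sum(y_true_f * y_pred_f)
    return (2. * intersection + smooth) / (K.sum(y_true_f) + K.sum(y_pred_f) + smooth)
```

```python
# Model name
model_name = 'fcn_8'
# Whether to load a pretrained model
pretrained = False
# Image size
imshape = (256, 256, 3)
# Hue of each shape class
hues = {'star': 30,
        'square': 0,
        'circle': 90,
        'triangle': 60}
# Labels
labels = sorted(hues.keys())
# Number of classes: the four shapes plus one extra class for the background
n_classes = len(labels) + 1
# Paths to the images and annotations
# (sorted_fns is not defined in this article; it is assumed to come from part 1)
image_paths = [os.path.join('FCN-dataset/images', x) for x in sorted_fns('images')]
annot_paths = [os.path.join('FCN-dataset/annotated', x) for x in sorted_fns('annotated')]
```

Building the model: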

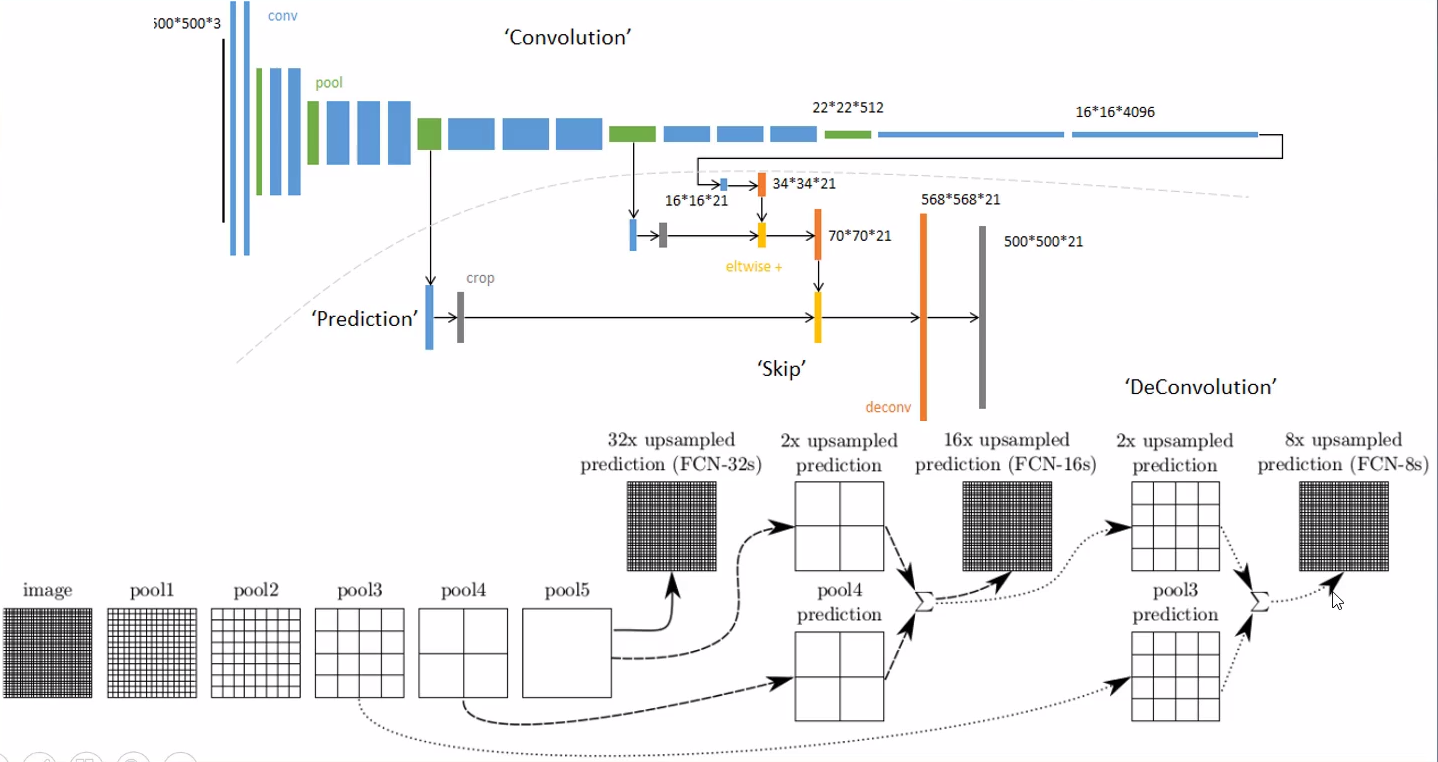

FCN-8的架构图如下。

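FCN uses a fully convolutional network whose final output has shape (height, width, n_classes). This gives a per-pixel class prediction for the mask.

A VGG network first shrinks the input by a factor of 32 through a series of convolution and pooling layers. Here the encoder is a slimmed-down, VGG-style network. In this example the input image is (256, 256, 3), and the last convolution, conv7, is 8×8×2048. The original VGG-based FCN uses 4096 channels at this layer.

Upsampling conv7 directly by 32× with a transposed convolution, to an output of (256, 256, n_classes), would lose much of the fine detail held in the shallow layers. The segmentation would therefore be coarse. To help the model capture details, skip connections also feed in the outputs of shallower layers.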
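The size arithmetic above can be sketched in plain Python. This is a 1D illustration that ignores channels and bias. Five stride-2 poolings take a side length of 256 down to 8, a factor of 32.

A transposed convolution whose kernel size equals its stride, as in the Conv2DTranspose layers of this model, gives each input value its own block of the output. It therefore multiplies the length by the stride. The helper names `pooled_sizes` and `transposed_conv1d` are hypothetical.

```python
def pooled_sizes(size, n_pools=5, pool=2):
    """Side length after each 2x2, stride-2 max-pooling layer."""
    sizes = [size]
    for _ in range(n_pools):
        size //= pool
        sizes.append(size)
    return sizes

def transposed_conv1d(x, w):
    """1D transposed convolution with stride == len(w): no overlap,
    so x[i] * w is written to output positions i*s .. i*s + s - 1."""
    s = len(w)
    out = [0.0] * (len(x) * s)
    for i, xi in enumerate(x):
        for j, wj in enumerate(w):
            out[i * s + j] += xi * wj
    return out

sizes = pooled_sizes(256)
print(sizes)                 # [256, 128, 64, 32, 16, 8]
print(256 // sizes[-1])      # 32: total downsampling factor
print(transposed_conv1d([1.0, 2.0], [0.5, 1.0]))  # [0.5, 1.0, 1.0, 2.0]: length doubled
```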

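Therefore, pool3 (32, 32, 64) and pool4 (16, 16, 128) from the middle of the VGG network serve as skip connections. The steps are as follows (here n_classes = 5):

- Apply a 1x1 convolution to pool4, denoted pool4_n, with output (16, 16, n_classes).
- Upsample conv7 by 2× with a transposed convolution, denoted u2, also with output (16, 16, n_classes).
- Add u2 and pool4_n, denoted u2_skip, also with output (16, 16, n_classes).
- Apply a 1x1 convolution to pool3, denoted pool3_n, with output (32, 32, 5).
- Upsample u2_skip by 2× with a transposed convolution, denoted u4, also with output (32, 32, 5).
- Add pool3_n and u4, denoted u4_skip, also with output (32, 32, 5).
- Finally, upsample u4_skip by 8× with a transposed convolution to get (256, 256, 5), the final output.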
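The shapes in these steps can be double-checked with a plain-Python sketch that tracks only (height, width, channels). The helpers `conv1x1`, `upsample` and `add` are hypothetical shape-only stand-ins:

- `conv1x1` stands in for Conv2D with a 1x1 kernel.
- `upsample` stands in for Conv2DTranspose with kernel_size equal to strides and padding='same'.
- `add` stands in for Add(), which requires identical input shapes.

The last lines show why pool3 needs the 1x1 convolution before the addition.

```python
def conv1x1(shape, filters):
    """A 1x1 Conv2D keeps height and width and only changes the channel count."""
    h, w, _ = shape
    return (h, w, filters)

def upsample(shape, filters, stride):
    """Conv2DTranspose with kernel_size == strides and padding='same' scales H and W by stride."""
    h, w, _ = shape
    return (h * stride, w * stride, filters)

def add(a, b):
    """Add() is element-wise, so both inputs must have exactly the same shape."""
    if a != b:
        raise ValueError(f"shape mismatch: {a} vs {b}")
    return a

n_classes = 5
pool3, pool4, conv7 = (32, 32, 64), (16, 16, 128), (8, 8, 2048)

pool4_n = conv1x1(pool4, n_classes)       # (16, 16, 5)
u2 = upsample(conv7, n_classes, 2)        # (16, 16, 5)
u2_skip = add(pool4_n, u2)                # (16, 16, 5)
pool3_n = conv1x1(pool3, n_classes)       # (32, 32, 5)
u4 = upsample(u2_skip, n_classes, 2)      # (32, 32, 5)
u4_skip = add(pool3_n, u4)                # (32, 32, 5)
output = upsample(u4_skip, n_classes, 8)  # (256, 256, 5)
print(output)  # (256, 256, 5)

# Adding the raw pool3 (64 channels) instead of pool3_n fails:
try:
    add(pool3, u4)
except ValueError as e:
    print(e)  # shape mismatch: (32, 32, 64) vs (32, 32, 5)
```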

The FCN code is as follows:

```python
b = 4  # base exponent: block 1 uses 2**b = 16 filters
i = Input(shape=imshape)
# Rescale pixels to [-1, 1] inside the model
s = Lambda(lambda x: preprocess_input(x))(i)

# Block 1
x = Conv2D(2**b, (3, 3), activation='elu', padding='same', name='block1_conv1')(s)
x = Conv2D(2**b, (3, 3), activation='elu', padding='same', name='block1_conv2')(x)
x = MaxPooling2D((2, 2), strides=(2, 2), name='block1_pool')(x)
f1 = x
# shape: (None, 128, 128, 16)

# Block 2
x = Conv2D(2**(b+1), (3, 3), activation='elu', padding='same', name='block2_conv1')(x)
x = Conv2D(2**(b+1), (3, 3), activation='elu', padding='same', name='block2_conv2')(x)
x = MaxPooling2D((2, 2), strides=(2, 2), name='block2_pool')(x)
f2 = x
# shape: (None, 64, 64, 32)

# Block 3
x = Conv2D(2**(b+2), (3, 3), activation='elu', padding='same', name='block3_conv1')(x)
x = Conv2D(2**(b+2), (3, 3), activation='elu', padding='same', name='block3_conv2')(x)
x = Conv2D(2**(b+2), (3, 3), activation='elu', padding='same', name='block3_conv3')(x)
x = MaxPooling2D((2, 2), strides=(2, 2), name='block3_pool')(x)
pool3 = x
# shape: (None, 32, 32, 64)

# Block 4
x = Conv2D(2**(b+3), (3, 3), activation='elu', padding='same', name='block4_conv1')(x)
x = Conv2D(2**(b+3), (3, 3), activation='elu', padding='same', name='block4_conv2')(x)
x = Conv2D(2**(b+3), (3, 3), activation='elu', padding='same', name='block4_conv3')(x)
pool4 = MaxPooling2D((2, 2), strides=(2, 2), name='block4_pool')(x)
# shape: (None, 16, 16, 128)

# Block 5
x = Conv2D(2**(b+3), (3, 3), activation='elu', padding='same', name='block5_conv1')(pool4)
x = Conv2D(2**(b+3), (3, 3), activation='elu', padding='same', name='block5_conv2')(x)
x = Conv2D(2**(b+3), (3, 3), activation='elu', padding='same', name='block5_conv3')(x)
pool5 = MaxPooling2D((2, 2), strides=(2, 2), name='block5_pool')(x)
# shape: (None, 8, 8, 128)

# conv6 and conv7 on top of pool5 (VGG's fully connected layers, made convolutional)
conv6 = Conv2D(2048, (7, 7), activation='elu', padding='same', name="conv6")(pool5)
conv6 = Dropout(0.5)(conv6)
conv7 = Conv2D(2048, (1, 1), activation='elu', padding='same', name="conv7")(conv6)
conv7 = Dropout(0.5)(conv7)
# shape: (None, 8, 8, 2048)

# 1x1 convolution on pool4: 16*16*n_classes
pool4_n = Conv2D(n_classes, (1, 1), activation='elu', padding='same')(pool4)
# shape: (None, 16, 16, 5)

# Upsample conv7 to 16*16*5 and add pool4_n
u2 = Conv2DTranspose(n_classes, kernel_size=(2, 2), strides=(2, 2), padding='same')(conv7)
u2_skip = Add()([pool4_n, u2])
# shape: (None, 16, 16, 5)

# 1x1 convolution on pool3: 32*32*n_classes
pool3_n = Conv2D(n_classes, (1, 1), activation='elu', padding='same')(pool3)

# Upsample (conv7 + pool4) and add pool3_n
u4 = Conv2DTranspose(n_classes, kernel_size=(2, 2), strides=(2, 2), padding='same')(u2_skip)
u4_skip = Add()([pool3_n, u4])
# shape: (None, 32, 32, 5)

# Upsample 8x to 256*256 and apply a per-pixel softmax over the classes
o = Conv2DTranspose(n_classes, kernel_size=(8, 8), strides=(8, 8), padding='same',
                    activation='softmax')(u4_skip)

model = Model(inputs=i, outputs=o, name='fcn_multi')
model.compile(optimizer=Adam(learning_rate=1e-4),
              loss='categorical_crossentropy',
              metrics=[dice])
# model.summary()
```

Finally, train the model with the DataGenerator from the previous article.

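```python
# DataGenerator is the data generator built in part 1 of this series
dataGenerator = DataGenerator(image_paths, annot_paths)
# fit_generator is deprecated in TensorFlow 2.x; model.fit accepts the generator directly
model.fit(dataGenerator,
          steps_per_epoch=len(dataGenerator),
          epochs=500, verbose=1)
```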